Eager Space

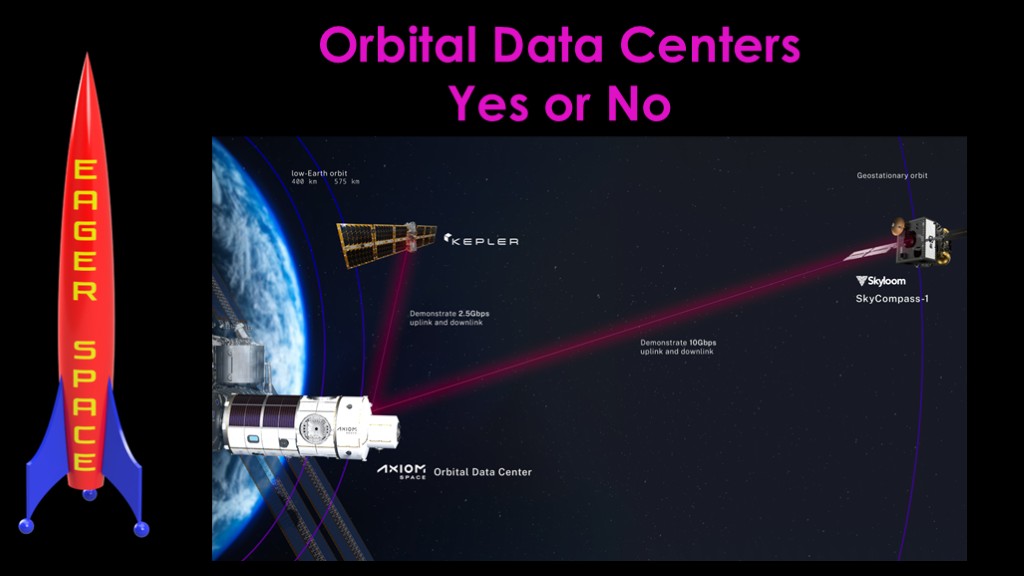

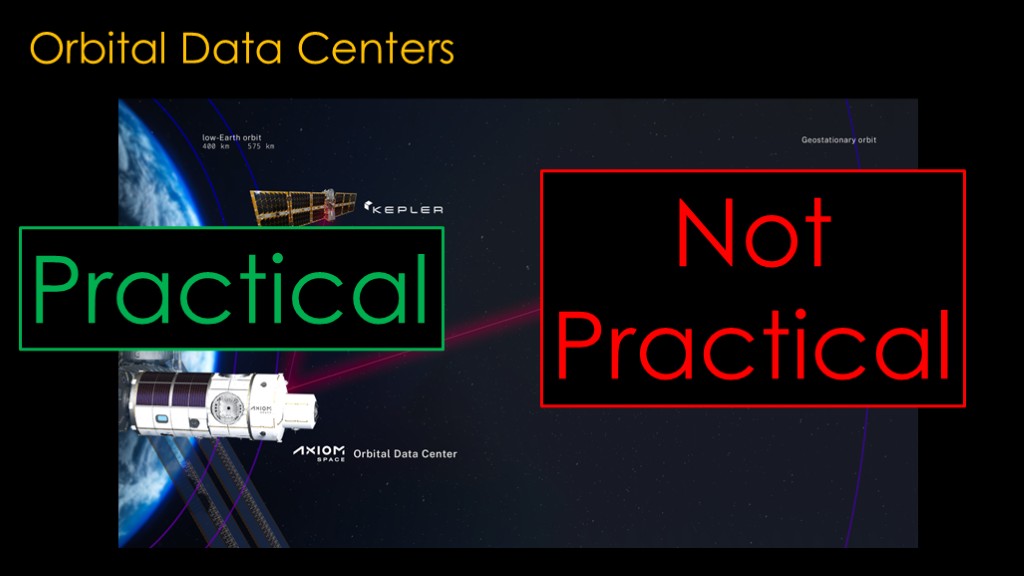

There has been a lot of talk about orbital data centers recently. Instead of collecting solar energy in space and sending it back to Earth, the idea is to move energy-hungry server data centers off the planet into orbit and just move data around, which is something we know how to do.

It's an interesting idea, but what's not clear is whether it is actually a practical idea.

We're going to go through what it will take to build a data center in low earth orbit, like the one that Axiom is proposing here.

What's your prediction? Practical, or not practical?

I'm going to make a lot of assumptions along the way. Feel free to choose your own numbers if you think that I'm off target.

Let's start at the small end, and I'm going to arbitrarily pick 250 servers as the target. In a terrestrial data center, that would take up around 6 racks of space, and many companies have their own data centers of this size.

The big companies have much larger data centers - these are the Microsoft Data Centers in Quincy, Washington. You'll need to squint to pick out cars in the parking lots.

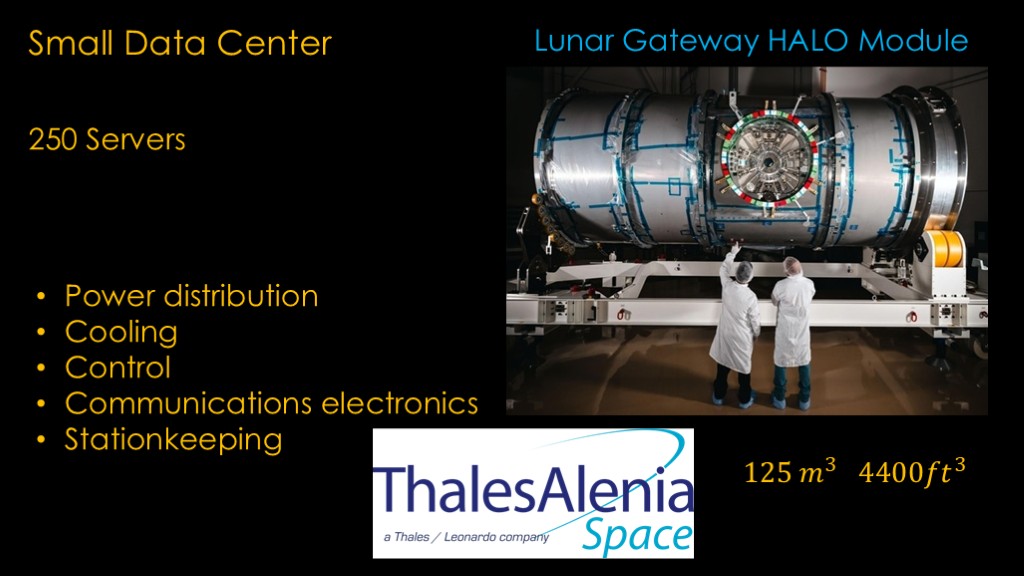

We'll need some space for our servers. I don't know exactly how much space to allocate, so I'm just going to ask the European Thales Alenia folks to build us a module like the Lunar Gateway HALO module.

That gives us 125 cubic meters, or 4400 cubic feet, of space to use.

That seems like a lot of space for 250 servers, but we do need space for power distribution, cooling, control systems, and communications electronics. We also need stationkeeping and attitude control to keep us in the right location and pointed in the correct direction.

We are now going to need to power our servers.

I'm going to pick 500 watts of power use per server, and that puts the overall power requirement at 125 kilowatts.

There will be inefficiency in power conversion, there will be other systems using power including ground communication, and solar panels will degrade over time.

And it's good to have some headroom in any design. I'm going to double the power requirement up to 250 kilowatts, and that will be our solar power target.
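The power budget is just multiplication. Here is a minimal sketch in Python using the numbers above; the single 2× margin stands in for all of the overheads just listed rather than modeling each loss separately.

```python
# Power budget for the orbital data center, using the figures from the text.
SERVERS = 250
WATTS_PER_SERVER = 500   # assumed average draw per server
MARGIN = 2.0             # one factor covering conversion losses, other loads,
                         # panel degradation, and design headroom

server_load_w = SERVERS * WATTS_PER_SERVER
solar_target_w = server_load_w * MARGIN

print(f"Server load:  {server_load_w / 1000:.0f} kW")   # 125 kW
print(f"Solar target: {solar_target_w / 1000:.0f} kW")  # 250 kW
```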

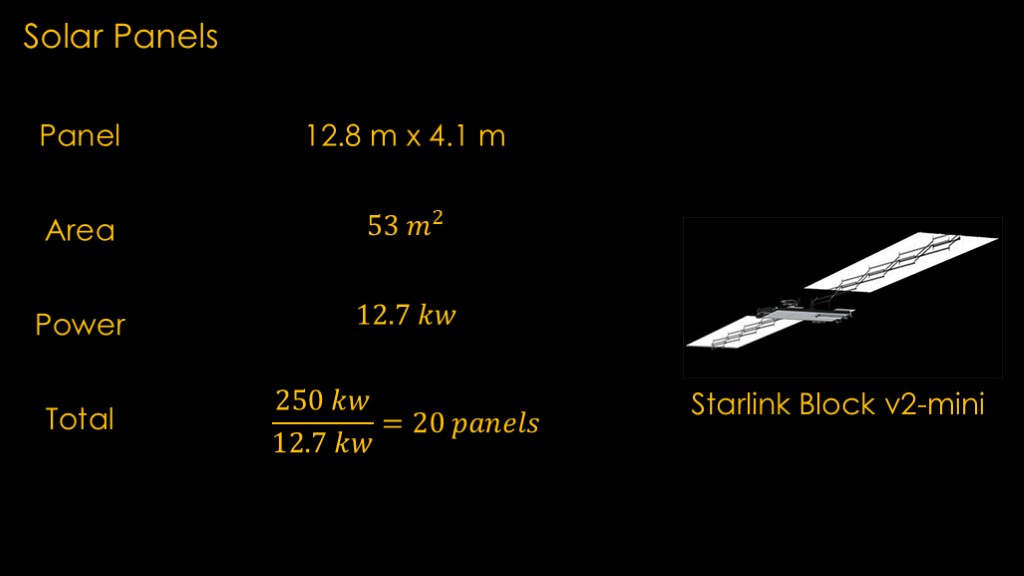

We will need panels that can easily be produced in high volume and are reasonably cheap, and an easy choice is the panels used on the Starlink V2 Mini satellites currently being launched on Falcon 9.

Each panel is 12.8 meters by 4.1 meters and has a surface area of 53 square meters.

With quality solar cells, each square meter will produce about 240 watts, or 12.7 kilowatts for each array.

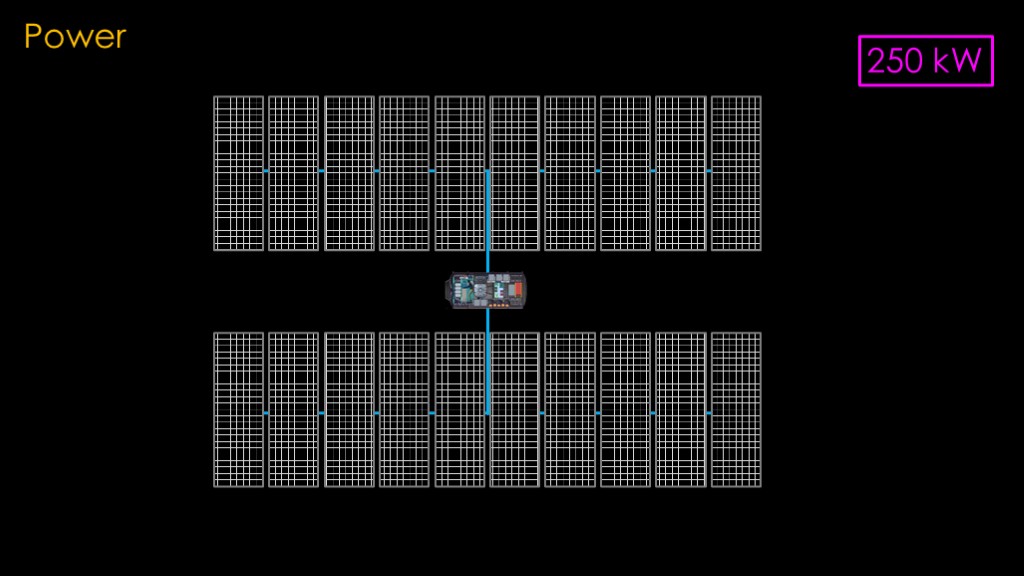

We need 20 panels to generate the 250 kilowatts that we need.
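The array count follows from the panel dimensions and the assumed cell output. A short sketch; note that using the unrounded area (52.5 square meters rather than 53) gives about 12.6 kilowatts per array, and the count still rounds up to 20.

```python
import math

# Starlink-style array dimensions and cell output, as given in the text
ARRAY_LENGTH_M = 12.8
ARRAY_WIDTH_M = 4.1
WATTS_PER_M2 = 240      # assumed output of quality cells
TARGET_W = 250_000      # solar power target from the power budget

area_m2 = ARRAY_LENGTH_M * ARRAY_WIDTH_M
watts_per_array = area_m2 * WATTS_PER_M2
arrays_needed = math.ceil(TARGET_W / watts_per_array)  # round up: no partial arrays

print(f"{area_m2:.1f} m^2, {watts_per_array / 1000:.1f} kW per array, "
      f"{arrays_needed} arrays")
```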

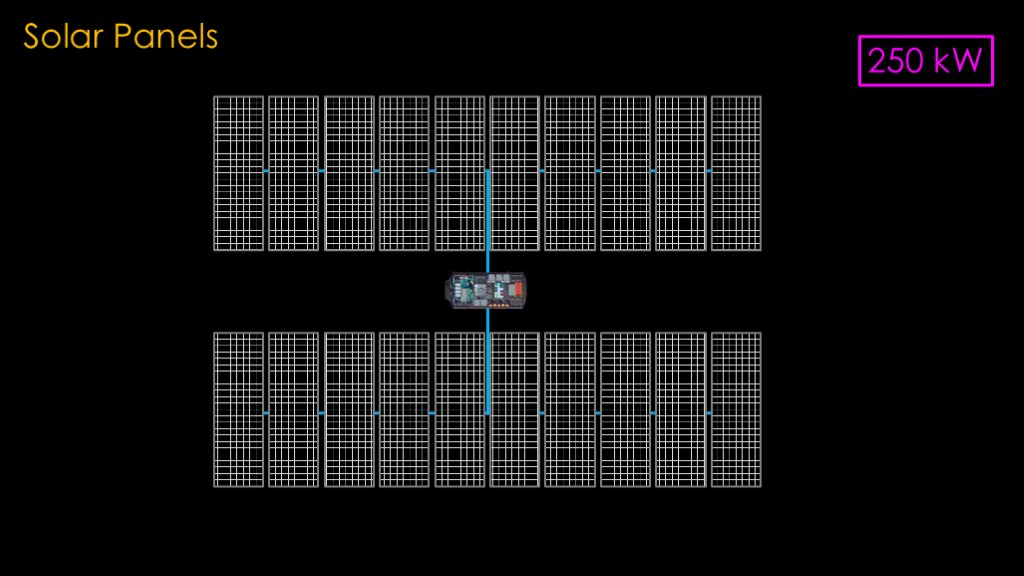

Here's what that looks like. Our little module is surrounded by a swarm of solar arrays. Looks a little bit silly, but servers are very power hungry.

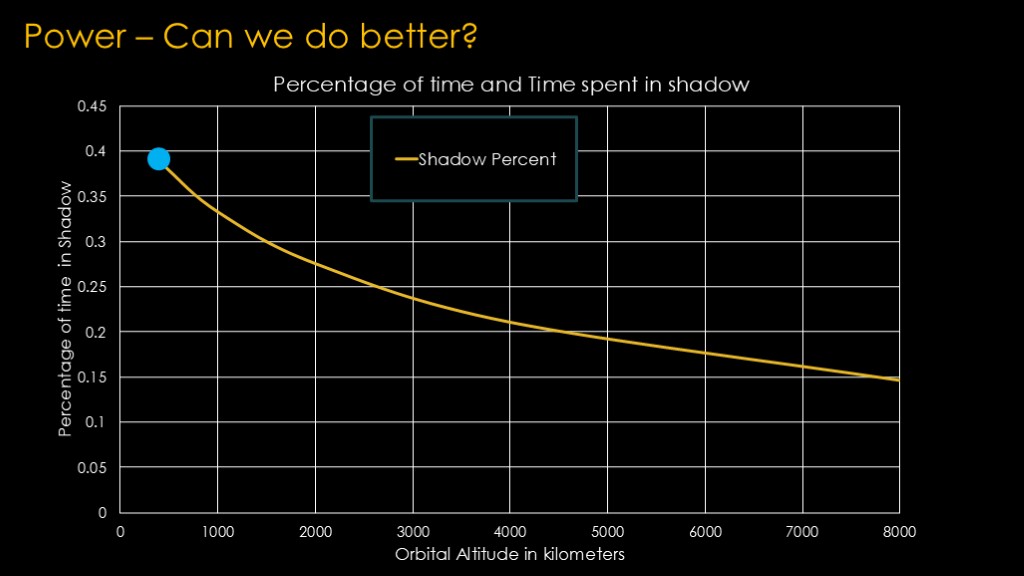

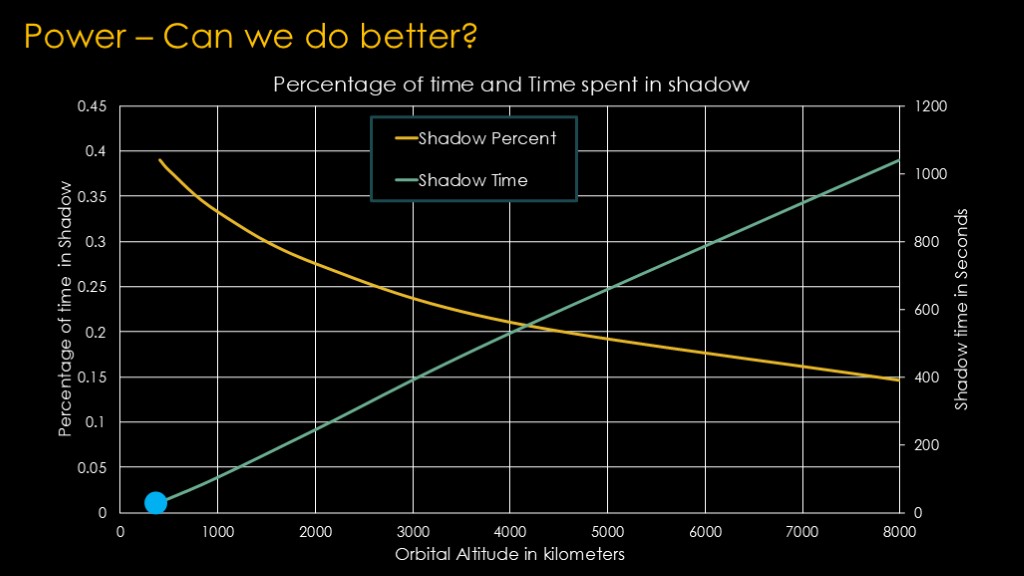

There's a significant issue we need to deal with. If our data center is in a 400 km orbit, it has an orbital period of about 92 minutes, and up to 39% of that time is spent in darkness. During that time our solar panels produce no electricity, and that works out to about 36 minutes per orbit.

We need battery backup systems to provide 250 kilowatts of power for 36 minutes, or about 150 kilowatt-hours, which is roughly what these commercial systems can do. They're a bit bulky and they weigh about 11 tons.

This is our 250 kilowatt array, which provides us the power to run our data center, but it doesn't provide enough power to charge the batteries to keep it running during the night.

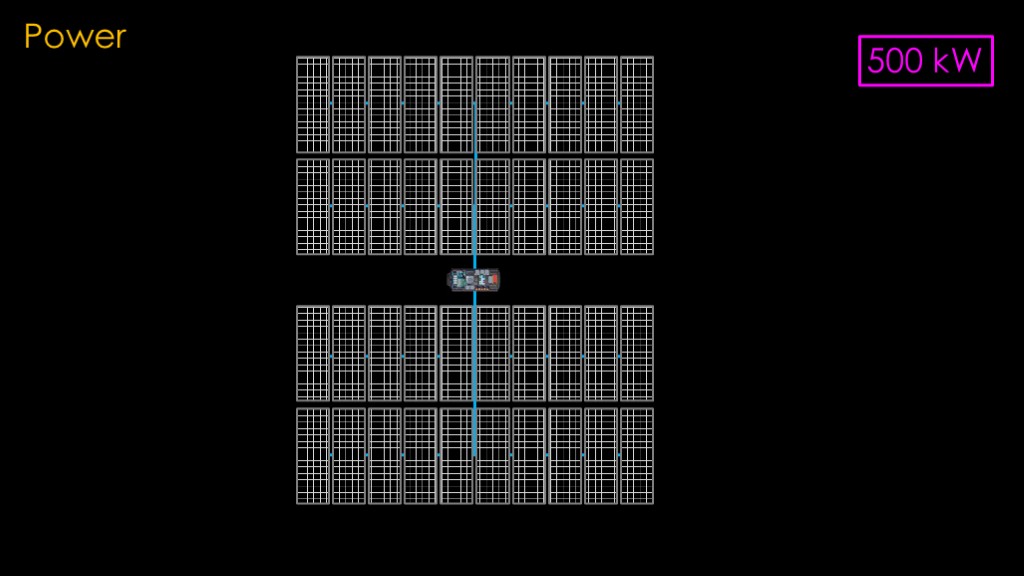

Let me introduce our 40 panel array that can generate 500 kilowatts of power.
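One way to see why the array has to grow: over a full orbit, the energy collected in sunlight has to cover the load for the whole orbit, so the array must produce at least P_load / (1 − shadow fraction). A minimal sketch, assuming the 39% worst-case shadow fraction at 400 km and ignoring battery charge and discharge losses (which push the real requirement higher):

```python
# Energy balance: P_array * (1 - f_shadow) >= P_load over one orbit.
# Battery round-trip losses are ignored here, so this is a lower bound.
LOAD_KW = 250
SHADOW_FRACTION = 0.39   # worst-case fraction of the orbit in shadow at 400 km
KW_PER_ARRAY = 12.7      # output of one Starlink-style array, from the text

min_array_kw = LOAD_KW / (1 - SHADOW_FRACTION)
print(f"Minimum array: {min_array_kw:.0f} kW "
      f"(~{min_array_kw / KW_PER_ARRAY:.0f} arrays)")
```

That lower bound comes out around 410 kilowatts, so the 40-panel, 500 kilowatt array sits roughly 20% above it, which plausibly covers battery losses and panel degradation.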

The large number of panels plus the battery backup is unfortunate. Is there a way we could optimize them?

Higher orbits are larger, and from farther out Earth blocks less of the view toward the Sun, so a smaller fraction of each orbit is spent in Earth's shadow.

If we go from 400 kilometers up to 2000 kilometers, we go from spending 39% of our time in shadow to 27% of our time in shadow. That seems like a good thing.

Unfortunately, our *time* in shadow barely changes. The *percentage* of time spent in shadow has gone down, but the higher orbit has a longer orbital period, about 127 minutes instead of 92, so each pass through the shadow still lasts about 35 minutes. If we want to keep the data center going during the shadow period, we need a battery that is just about as large as before. We can reduce the panel count by somewhere around 15 to 20%, because we spend more of each orbit in sunlight to charge.

Not really an obvious win.
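To check the altitude trade, here is a rough sketch using Kepler's third law for the period and a simple cylindrical-shadow model with the Sun in the orbital plane (the worst case) for the shadow fraction. Earth's radius and gravitational parameter are standard values; the model ignores the penumbra, Earth's oblateness, and battery losses, so treat the results as ballpark figures.

```python
import math

MU_EARTH = 398_600.4418  # km^3/s^2, Earth's gravitational parameter
R_EARTH = 6_371.0        # km, mean Earth radius

def orbit_period_min(alt_km: float) -> float:
    """Circular-orbit period from Kepler's third law, in minutes."""
    a = R_EARTH + alt_km
    return 2 * math.pi * math.sqrt(a**3 / MU_EARTH) / 60

def shadow_fraction(alt_km: float) -> float:
    """Worst-case fraction of a circular orbit spent in Earth's shadow.

    Assumes a cylindrical shadow and the Sun in the orbital plane (beta = 0):
    the shadowed arc is 2 * asin(R / a) out of 2 * pi.
    """
    return math.asin(R_EARTH / (R_EARTH + alt_km)) / math.pi

LOAD_KW = 250
for alt in (400, 2000):
    period = orbit_period_min(alt)
    frac = shadow_fraction(alt)
    shadow_min = period * frac
    battery_kwh = LOAD_KW * shadow_min / 60   # energy to ride through one eclipse
    array_kw = LOAD_KW / (1 - frac)           # minimum array to recharge in daylight
    print(f"{alt:>5} km: period {period:5.1f} min, shadow {frac:.1%} = "
          f"{shadow_min:4.1f} min, battery {battery_kwh:3.0f} kWh, "
          f"array {array_kw:3.0f} kW")
```

Under these assumptions the period grows from about 92 to about 127 minutes while the shadow fraction drops from about 39% to about 27.5%, so each eclipse lasts roughly 35 to 36 minutes at either altitude. The battery barely shrinks; only the minimum array size comes down, by roughly 16%.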

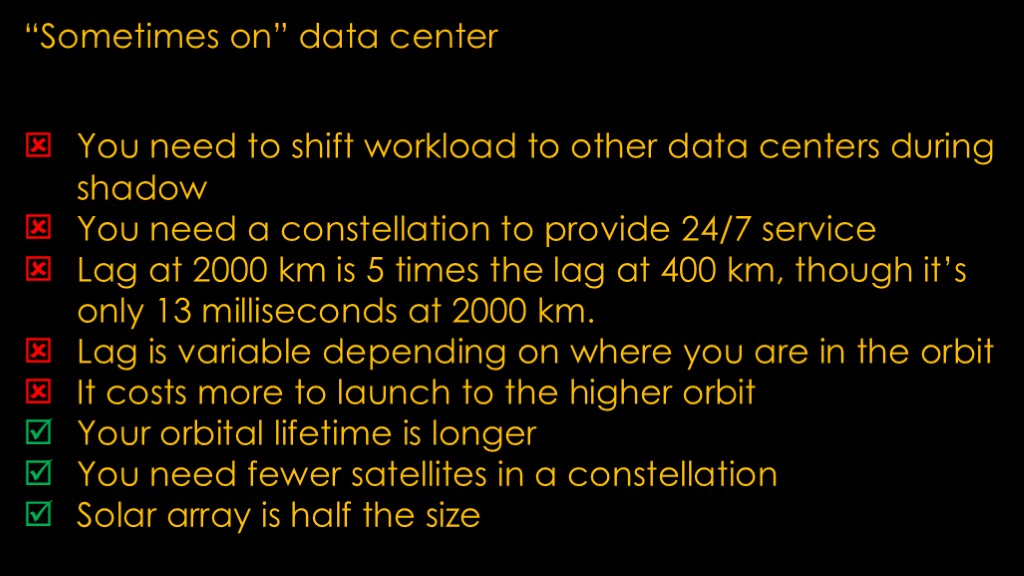

We could skip the batteries entirely and just shut down the data center during the shadow periods.

If you do this:

(read)

We also need to provide temperature control, and we know that it is very cold in space...

Khan may have been a genetically engineered superhuman, but apparently that left him little time to study thermodynamics.

Spacecraft are surrounded by what we call a "VAC - UUM"

You are probably familiar with thermos bottles, which keep hot fluids hot and cold fluids cold by using a vacuum to separate the liquid from the outside environment. With the exception of the stopper to close the top of the bottle, the only way for heat to move between the inside and outside is by radiation across the vacuum.

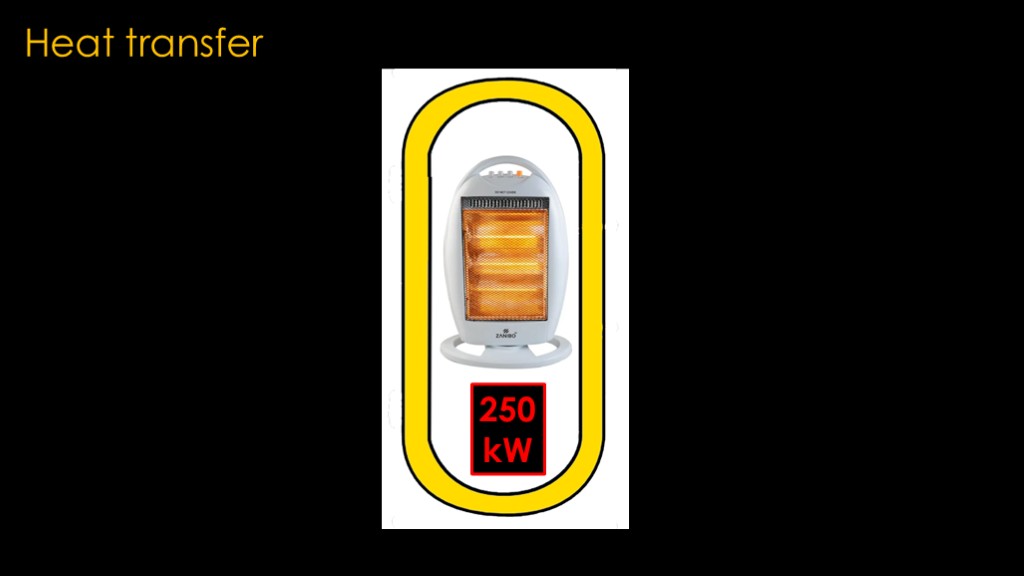

With our data center, you've closed off the stopper end of the thermos, used a better vacuum, and stuffed a 250 kilowatt space heater inside.

Does that mean it will get too hot?

Yes, and we can guess how hot that will be...

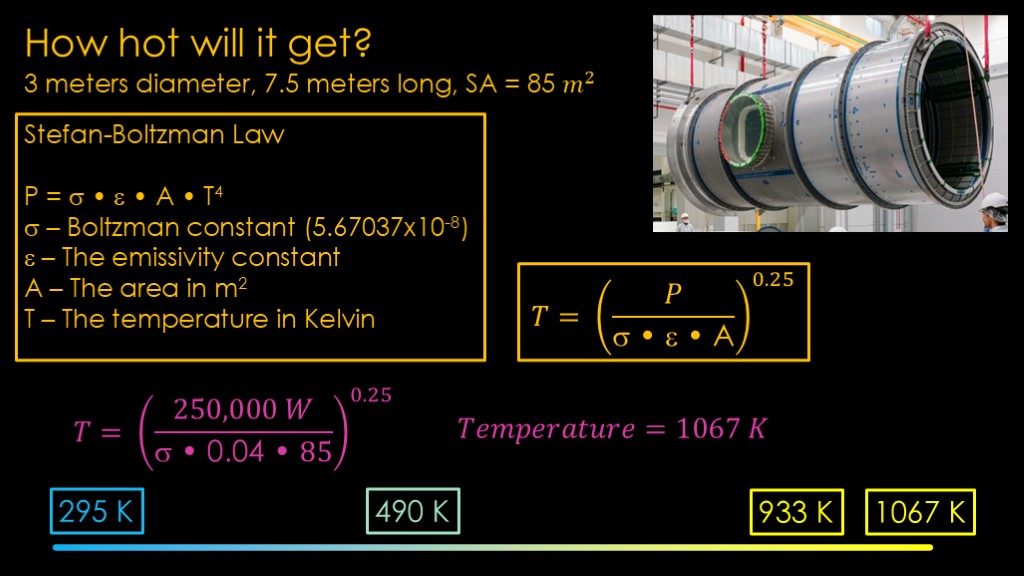

We know how much power is going into it, we know how much surface area the module has, and we know how good the aluminum is at emitting heat. We also remember the equations required to calculate the temperature.

We are hoping to keep our servers at about 72 degrees Fahrenheit, or 295 kelvin. When we do the math, we find that the temperature of our module will keep rising until it hits 1067 kelvin. Unfortunately, the aluminum in the module melts at 933 kelvin, so I think we have a bit of a problem.

That, however, assumes polished aluminum, which is very bad at radiating heat away. If we pick a coating that is much better, the estimated temperature drops to 490 kelvin, a mere 422 degrees Fahrenheit or 217 degrees Celsius. This estimate ignores the heat the module will pick up from the sun, which is made worse by the coating that makes the module better at radiating heat away.
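The temperature estimates come from radiative equilibrium: the module settles where the heat it radiates, ε·σ·A·T⁴, equals the 250 kilowatts going in. A minimal sketch follows. The module's radiating area is not given in the text, so 85 square meters is an assumption, picked to be plausible for a HALO-sized cylinder and to roughly reproduce the figures above; the emissivities (0.04 for polished aluminum, 0.9 for a high-emissivity coating) are typical assumed values. Like the text, it ignores sunlight and treats deep space as a 0 K sink.

```python
# Radiative equilibrium: P = eps * sigma * A * T^4  =>  T = (P / (eps * sigma * A))^(1/4)
SIGMA = 5.670374419e-8   # W/(m^2 K^4), Stefan-Boltzmann constant
POWER_W = 250_000        # heat generated inside the module
AREA_M2 = 85.0           # ASSUMED radiating area of the module (not given in the text)

def equilibrium_temp_k(power_w: float, emissivity: float, area_m2: float) -> float:
    """Temperature at which radiated power balances the internal heat load."""
    return (power_w / (emissivity * SIGMA * area_m2)) ** 0.25

# ASSUMED emissivities: polished aluminum ~0.04, high-emissivity coating ~0.9
for label, eps in (("polished aluminum", 0.04), ("high-emissivity coating", 0.9)):
    t_k = equilibrium_temp_k(POWER_W, eps, AREA_M2)
    t_c = t_k - 273.15
    print(f"{label:>24}: {t_k:5.0f} K = {t_c:4.0f} C = {t_c * 9 / 5 + 32:4.0f} F")
```

Because temperature goes as the fourth root of P/(εA), doubling the radiating area only lowers the absolute temperature by about 16%.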

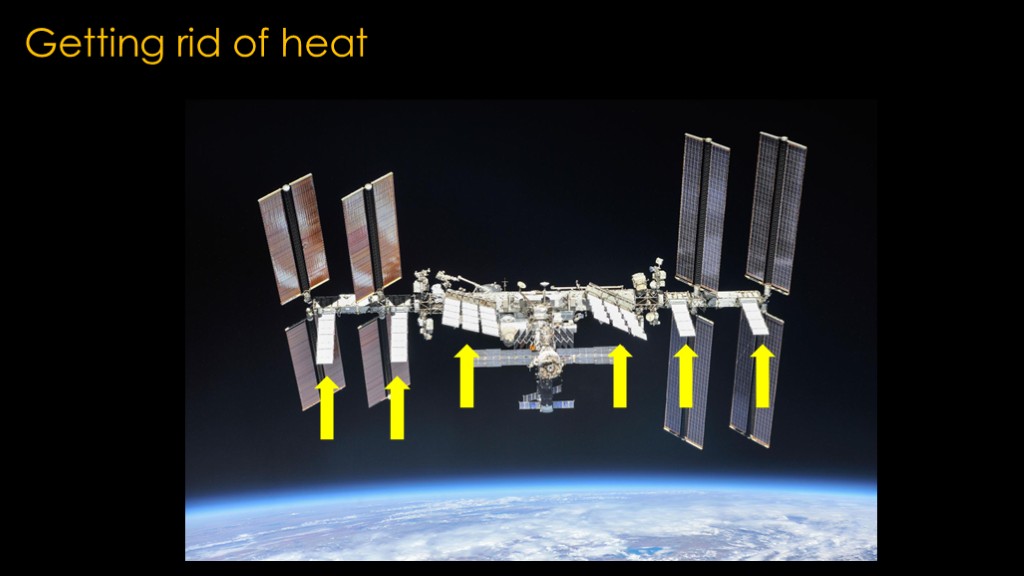

We need more surface area to get rid of our heat.

This is a common problem for spacecraft - it's hard to get rid of heat.

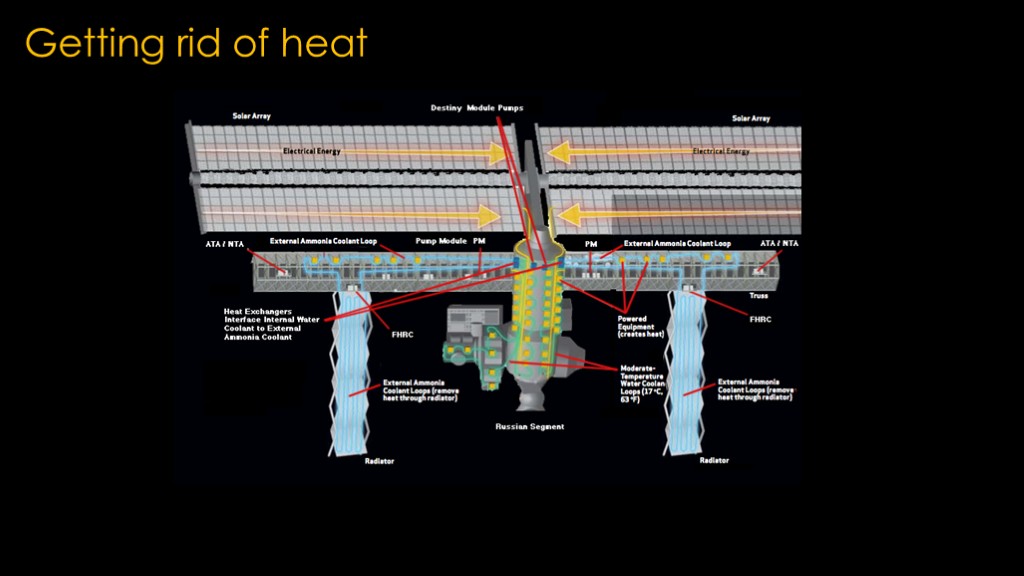

If we look at the ISS, it has two large radiator arrays to get rid of heat from the station, and it has small radiators to keep the power conversion electronics for each solar array cool.

Inside the space station, they use water to remove extra heat. Water is good because it can hold a lot of heat and is not hazardous if it spills.

It's not great in radiators because it can freeze in the space environment, so a heat exchanger transfers the heat to ammonia, which is pumped through the radiators.

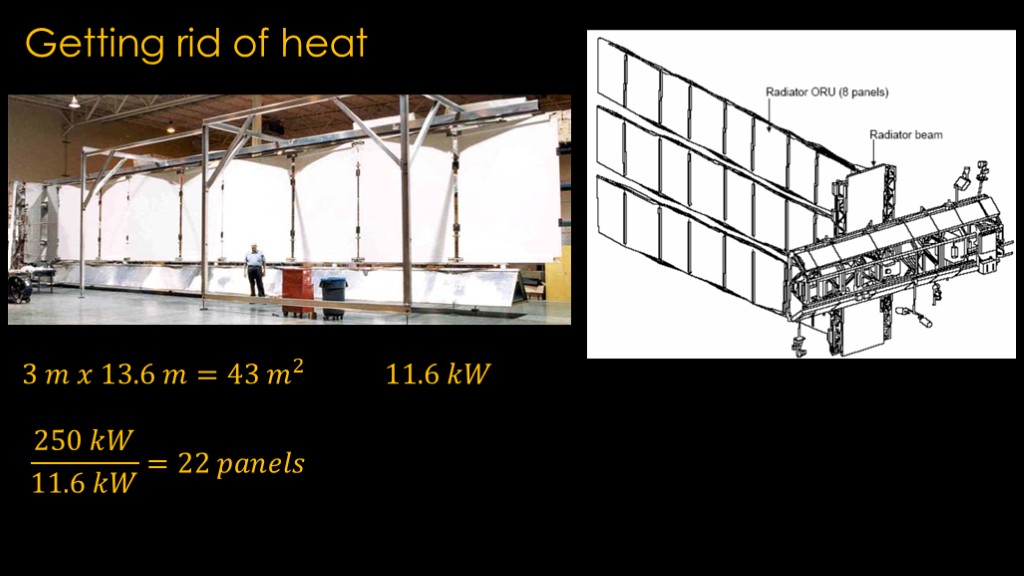

These are the radiators used on ISS.

Each wing of radiators is 3 meters by 13.6 meters or 43 square meters in area and can dissipate 11.6 kilowatts.

We need 250 kilowatts of dissipation, and that means 22 panels.
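Nearly every watt the data center draws ends up as heat, so the radiator count is the heat load divided by the rejection per section, rounded up. A sketch, assuming the ISS radiator rating simply scales with the number of sections:

```python
import math

HEAT_KW = 250        # heat to reject: essentially all of the electrical power
RADIATOR_KW = 11.6   # heat rejection per ISS radiator section, from the text

sections = math.ceil(HEAT_KW / RADIATOR_KW)  # round up: no partial radiators
print(f"{HEAT_KW / RADIATOR_KW:.2f} -> {sections} radiator sections")
```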

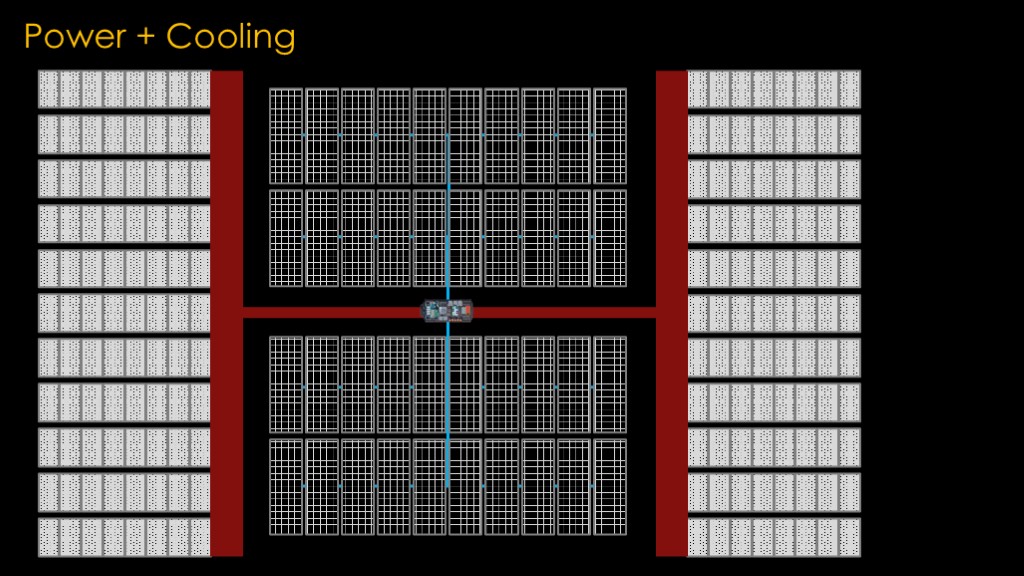

Here are the 22 radiator arrays that we need.

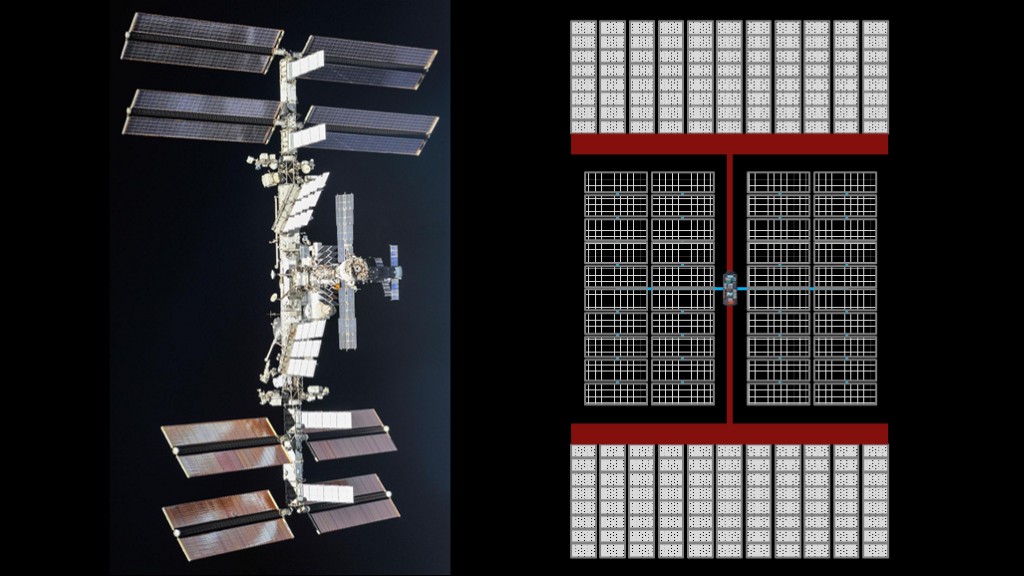

Our completed spacecraft is roughly the size of the completed ISS.

Can we reduce the radiator area?

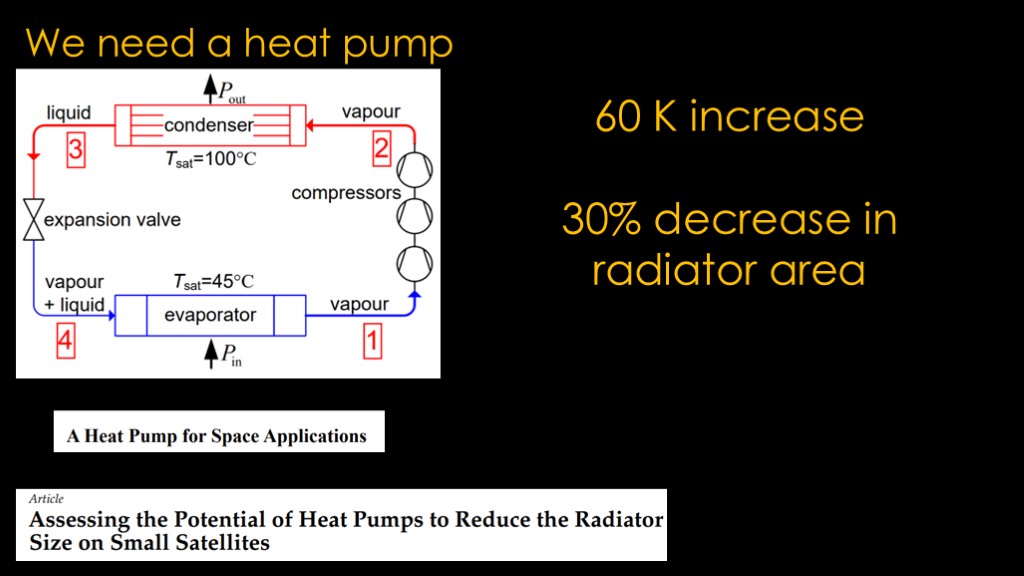

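Radiated power rises with the fourth power of the radiator's temperature, so a hotter radiator can be smaller. Converting a lot of moderately warm coolant into a smaller amount of hotter coolant for the radiator requires a heat pump.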

This isn't a new idea; there have been a number of papers that looked at this, and they have nicely done the hard work for us.

There is no free lunch - to move the heat around requires compressors and those require power, and that's more power for your solar panels to provide and more heat to dissipate.

The conclusion in one paper was that a heat pump giving a 60 kelvin increase in temperature would reduce radiator area by 30%. Not quite the 50% that we were hoping for, but it would take the design from 22 radiators down to 16.
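The hoped-for 50% is roughly what the T⁴ law alone predicts. A minimal sketch, assuming a 300 K baseline radiator temperature (an assumption; the text doesn't give one) and radiating to a 0 K sink. The ideal ratio ignores the compressor work, which adds to the heat the radiator must reject and is a big part of why the paper's figure is lower.

```python
import math

# Radiated flux goes as T^4, so for a fixed heat load the required radiator
# area scales as (T_before / T_after)^4. Compressor work is ignored here.
T_RADIATOR_K = 300.0     # ASSUMED baseline radiator temperature
LIFT_K = 60.0            # heat-pump temperature lift, per the paper cited in the text
PAPER_REDUCTION = 0.30   # area reduction the paper reports with compressor work included
BASELINE_SECTIONS = 22

ideal_area_ratio = (T_RADIATOR_K / (T_RADIATOR_K + LIFT_K)) ** 4
reduced_sections = math.ceil(BASELINE_SECTIONS * (1 - PAPER_REDUCTION))

print(f"Ideal area reduction from T^4 alone: {1 - ideal_area_ratio:.0%}")
print(f"Sections after the paper's 30% reduction: {reduced_sections}")
```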

You would be trading the smaller radiator area, with its lower manufacturing and launch cost, for a heavier cooling system that is more complex and less reliable, and perhaps for more solar panels.

Not really excited about this.

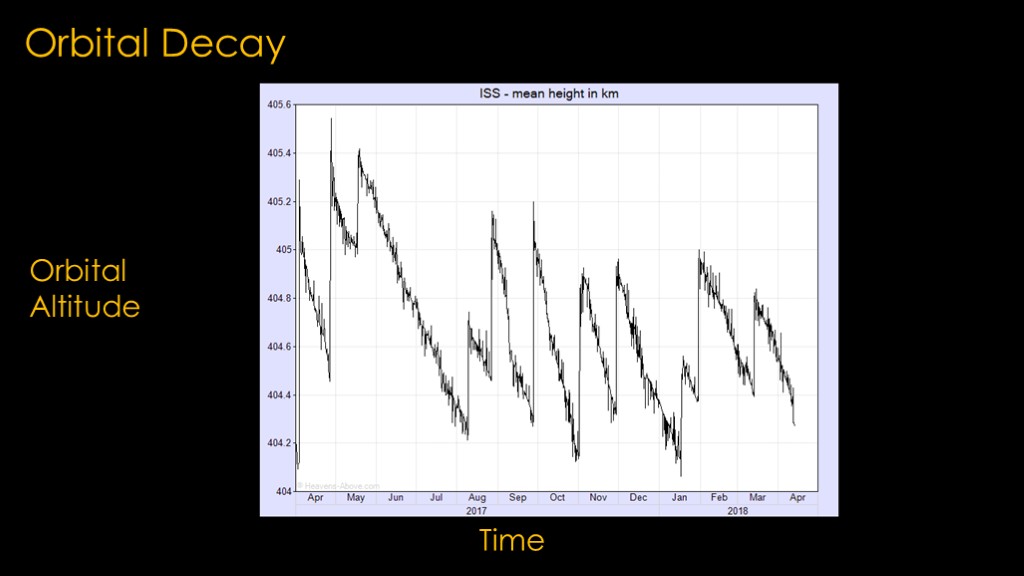

This chart shows the altitude of the ISS over the 12-month period from April 2017 to April 2018.

During that time, the orbital altitude of the ISS had to be increased 9 times.

Our spacecraft is about the same size but has more drag, so we can expect it to need ongoing reboosts if it is in a 400 kilometer orbit.

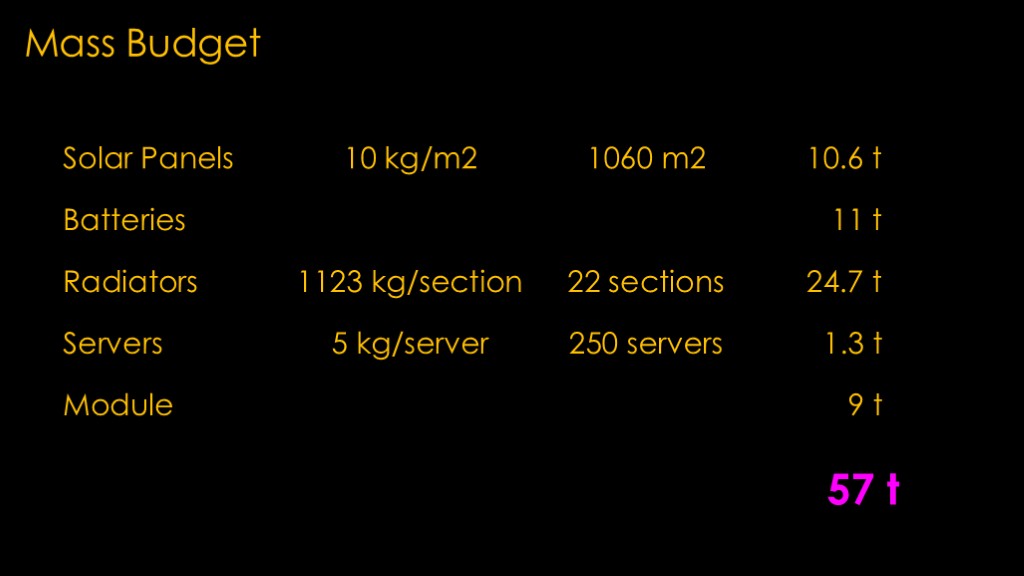

How much is this beast going to weigh? Time for a poor estimate.

It's very hard to find numbers for deployed solar panels, but 10 kilograms per square meter is a reasonable ballpark estimate. That gives us a mass of about 10.6 tons for 20 panels, or about 1,060 square meters of array.

The batteries are about 11 tons in commercially available systems.

The ISS Radiators are 1123 kilograms per section and we need 22 sections, so that's 24.7 tons.

I'm going to benchmark the servers themselves at 5 kilograms each, and with 250 we get 1.3 tons.

And I'm going to allocate another 9 tons for the module and everything else we need in it.

That gives us a total of 57 tons, and I'm sure there are parts that I forgot to include.
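The mass budget, tallied in metric tons from the per-item estimates above:

```python
# Rough mass budget in metric tons, using the per-item estimates from the text.
mass_t = {
    "solar arrays (20 x 53 m^2 at 10 kg/m^2)": 20 * 53 * 10 / 1000,
    "batteries": 11.0,
    "radiators (22 x 1123 kg)": 22 * 1123 / 1000,
    "servers (250 x 5 kg)": 250 * 5 / 1000,
    "module and everything else": 9.0,
}

for item, tons in mass_t.items():
    print(f"{item:<42} {tons:5.1f} t")

total_t = sum(mass_t.values())
print(f"{'total':<42} {total_t:5.1f} t")
```

This uses 20 arrays, as in the estimate above. Swapping in the 40-array, 500 kilowatt configuration would add another 10.6 tons, for roughly 67 tons.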


The only operational launch system for that kind of mass is SLS, and it's going to be hard to schedule and pay for one of those.

The mass does fall within the published capability of Falcon Heavy, but unfortunately the payload adapter can't handle more than 19 tons, and the second stage is sized for that mass, not 50-plus tons.

New Glenn could maybe/sorta do it if it performs as well as it supposedly can, but reports are that it's heavier and lower performing than it is supposed to be.

And of course, Starship would be an obvious choice.

Resize down to 100 servers, and that pushes it down to 10 tons, which a lot of launchers could handle.

But is 100 servers enough to do anything useful?

We need a rocket to launch this...

A fully expended Falcon Heavy springs to mind, as it can do 64 tons to low Earth orbit. Except that it can't, because the second stage and payload adapter are pulled directly from the Falcon 9, and they top out at about 19 tons of payload. You could probably pay SpaceX to modify the second stage, but it would not be cheap. And you would likely need a very big custom fairing.

SLS has plenty of oomph to do it, but you might need to do upper stage modifications and a new fairing, and it's a hard rocket to schedule and an even harder one to pay for.

Which leads us inevitably to Starship, which would be a nice launcher for our orbital data center.

There is an alternative that might work a little better.

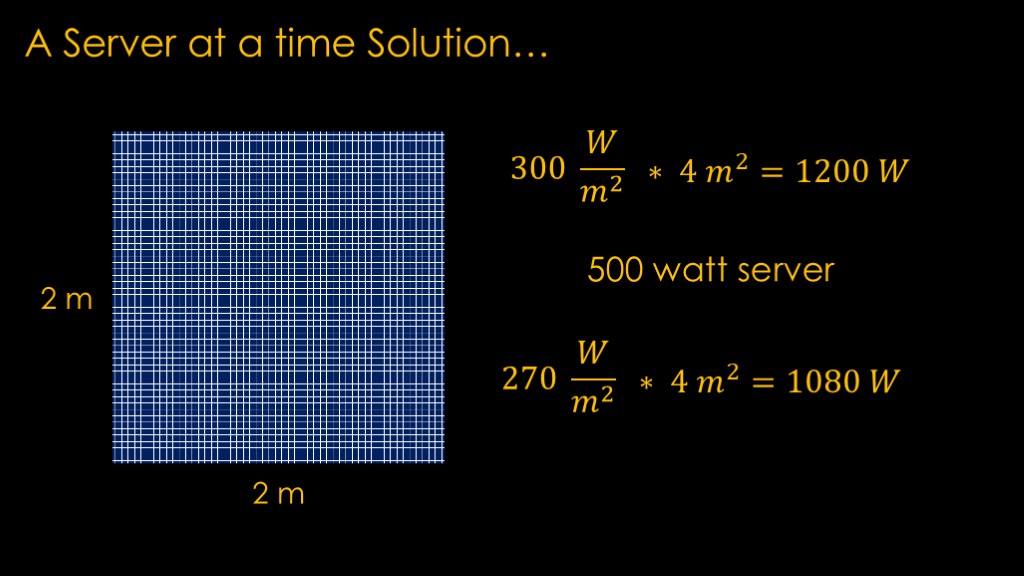

Let's take a solar panel that is two meters by two meters, and that gives us 4 square meters.

If we use high-efficiency solar cells that generate 300 watts per square meter, that gives us 1200 watts of power.

That's probably enough to run a single 500 watt server and have enough spare power to charge batteries for the night time and run the other systems.

The server is mounted on the back of the solar cells. Attached to the back of the server is a radiator that can dissipate 270 watts per square meter, or 1080 watts total. That should be enough to dissipate the 500 watts from the server, and it might be enough to dissipate the heat gained by the solar cells.
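A quick budget for one of these hypothetical per-server "tiles", using the numbers above. The 39% shadow fraction is an assumption (the worst case at about 400 km); the sketch only checks orbit-averaged power and radiator capacity, not the detailed thermal behavior of the cells.

```python
# Per-server "tile" budget: 2 m x 2 m of solar cells on the front,
# server in the middle, radiator on the back. Figures are the text's estimates.
TILE_AREA_M2 = 2.0 * 2.0
CELL_W_PER_M2 = 300        # high-efficiency cells, per the text
RADIATOR_W_PER_M2 = 270    # radiator heat rejection, per the text
SERVER_W = 500
SHADOW_FRACTION = 0.39     # ASSUMED worst-case shadow fraction at ~400 km

peak_w = TILE_AREA_M2 * CELL_W_PER_M2           # output in full sunlight
orbit_avg_w = peak_w * (1 - SHADOW_FRACTION)    # averaged over sun and shadow
radiator_w = TILE_AREA_M2 * RADIATOR_W_PER_M2   # heat the back radiator can reject

print(f"Peak power:        {peak_w:.0f} W")
print(f"Orbit average:     {orbit_avg_w:.0f} W vs {SERVER_W} W server")
print(f"Radiator capacity: {radiator_w:.0f} W, leaving "
      f"{radiator_w - SERVER_W:.0f} W for heat absorbed from sunlight")
```

The orbit-averaged figure of about 730 watts leaves a bit over 200 watts above the server's draw for battery losses and housekeeping, which is consistent with "probably enough".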

We can probably get away without a big cooling system and we don't need electronics that can handle large amounts of power. You could just build a lot of these, attach them to a small spacecraft that can handle station keeping and orientation, and go from there.

This does give us an interesting benchmark for orbital data centers. We're inherently limited by the area it takes to generate power to run servers and radiate away excess heat, and the minimum area is on the order of 4 square meters per server.

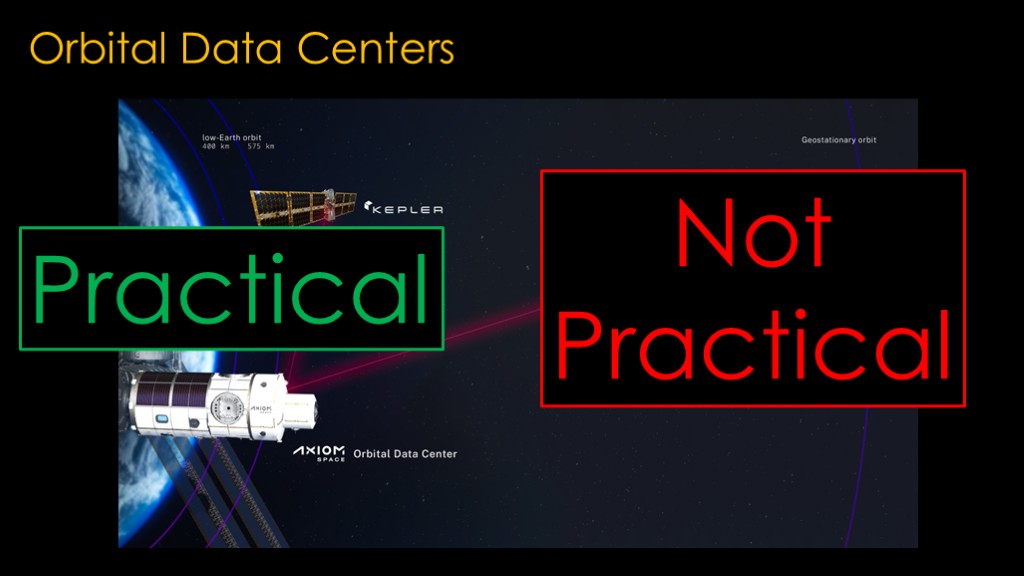

Practical or not practical? How do you vote?

The orbital data center is going to be very big and very complicated. You will spend a lot of time and money on design of the solar arrays, radiators, cooling systems, power systems, and the rest of the spacecraft. Then you have to pay for launch costs, operational costs on the ground to monitor it, and if it's in low Earth orbit it's going to have a short lifetime.

The only benefit I see is that you aren't paying for the electricity that you use, but that's less than $100,000 a year for our 250 servers at commercial rates.
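A sketch of the electricity bill on the ground for the 125 kilowatt server load. The rate is an assumption (8 cents per kilowatt-hour, roughly an industrial rate; actual prices vary widely by region), and cooling overhead in a terrestrial data center is ignored.

```python
SERVER_LOAD_KW = 125        # 250 servers x 500 W
HOURS_PER_YEAR = 24 * 365
PRICE_PER_KWH = 0.08        # ASSUMED electricity rate in USD per kWh

annual_kwh = SERVER_LOAD_KW * HOURS_PER_YEAR
annual_cost = annual_kwh * PRICE_PER_KWH

print(f"{annual_kwh:,} kWh/year, about ${annual_cost:,.0f}/year")
```

At roughly 1.1 million kilowatt-hours a year, the bill stays under $100,000 for any rate below about 9 cents per kilowatt-hour.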

https://youtu.be/nO62scTZ7Qk?t=4